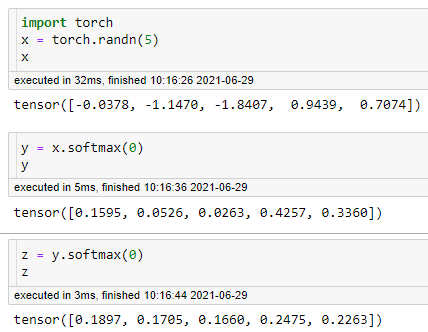

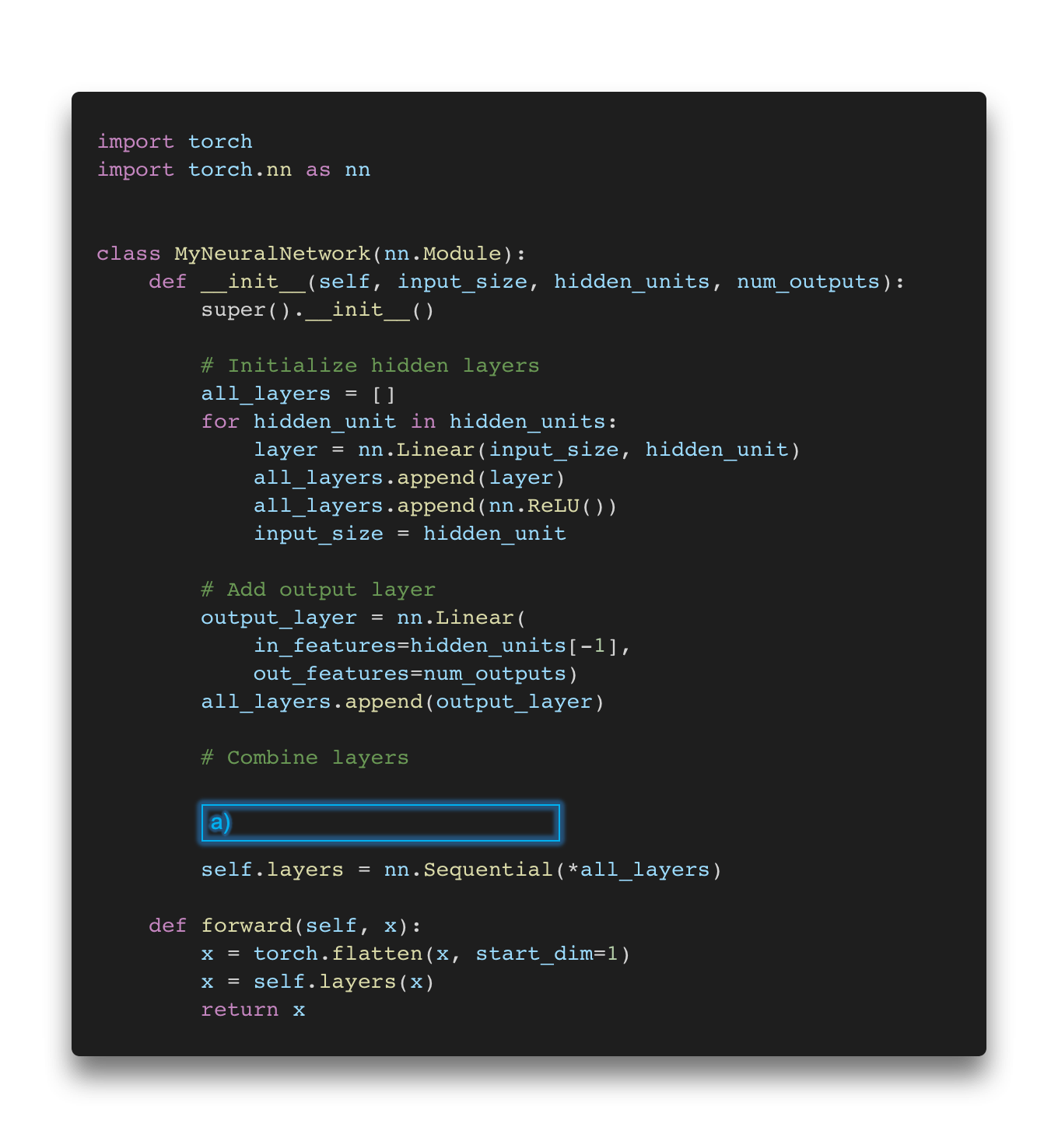

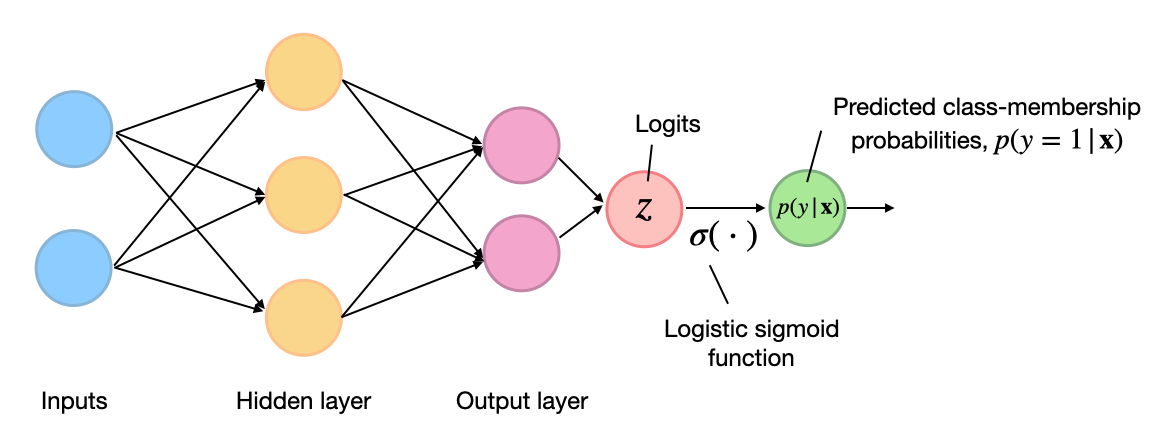

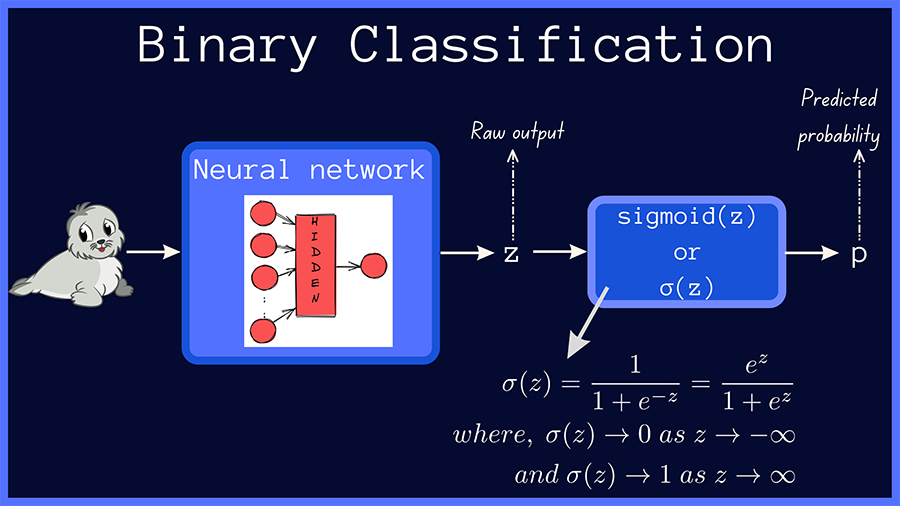

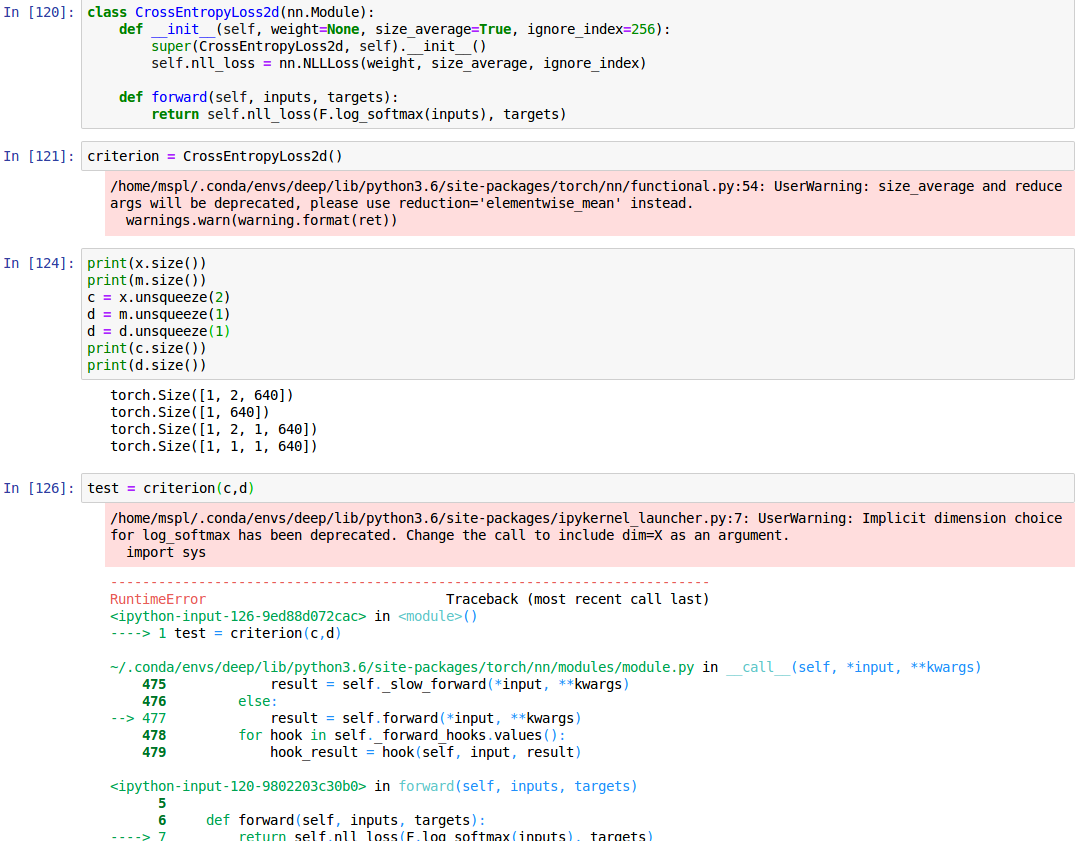

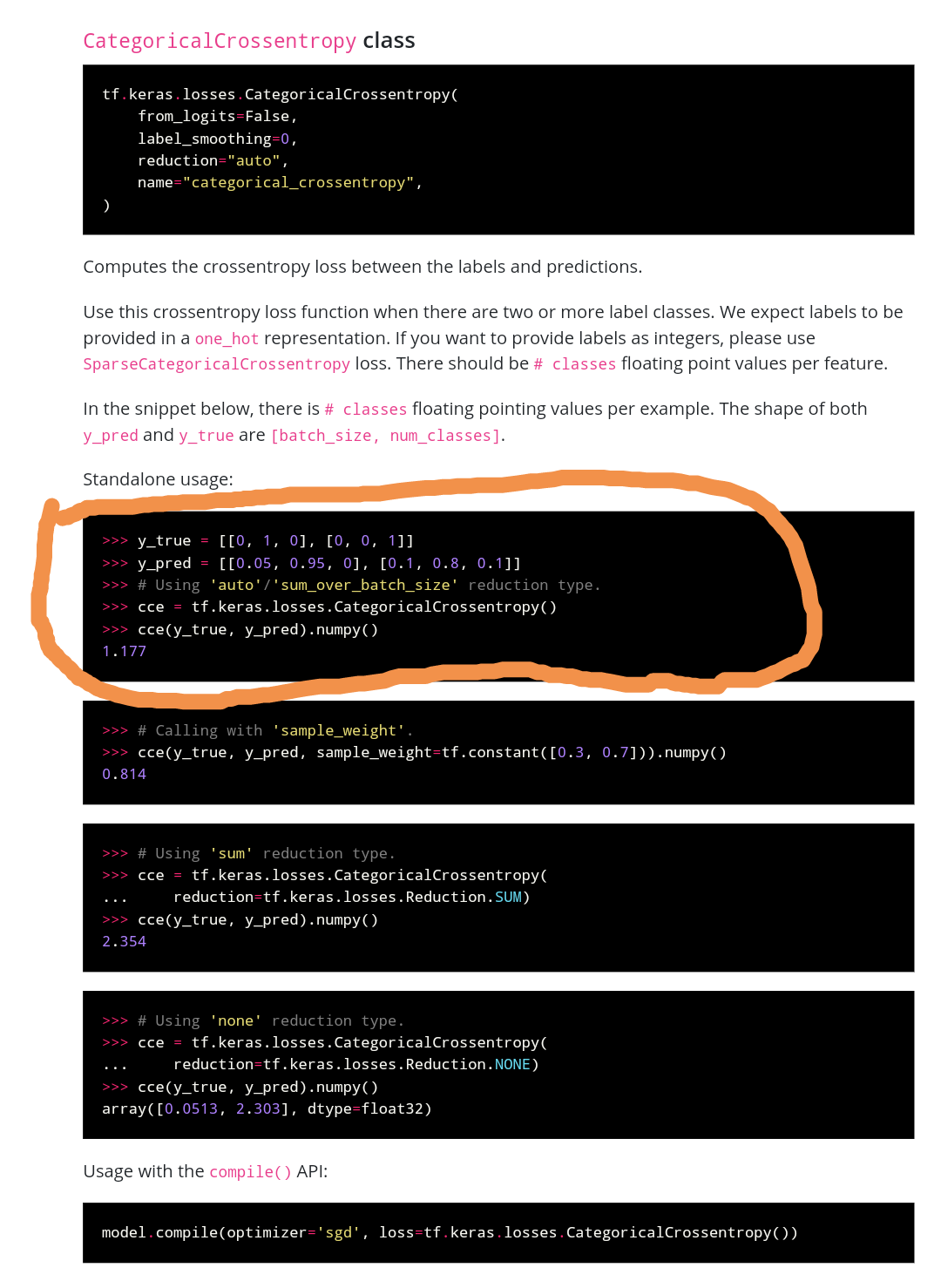

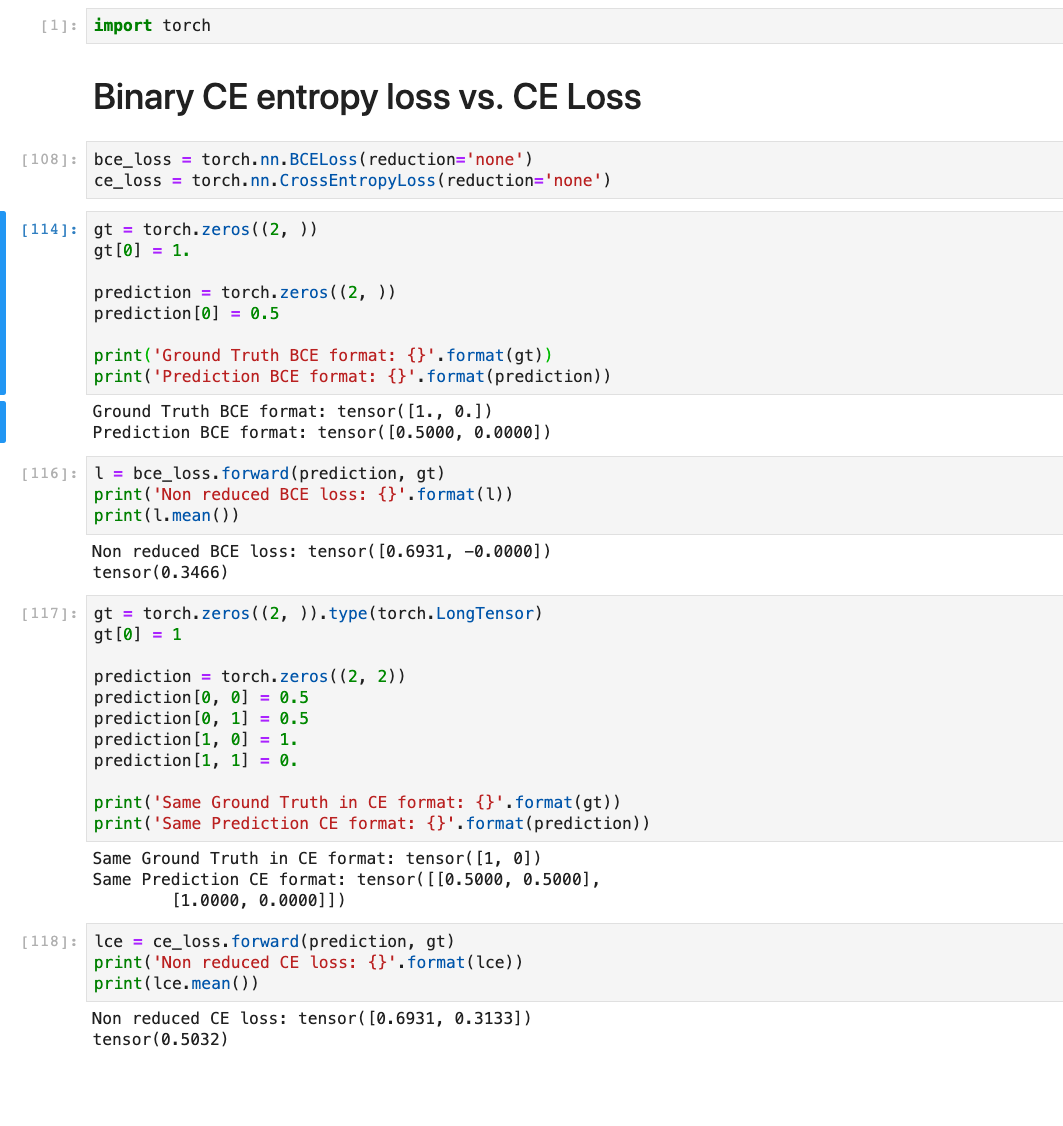

Why Softmax not used when Cross-entropy-loss is used as loss function during Neural Network training in PyTorch? | by Shakti Wadekar | Medium

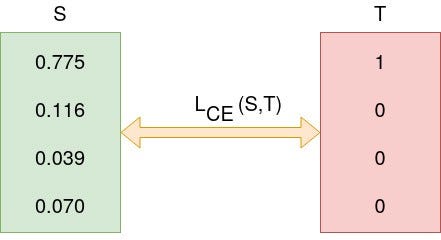

machine learning - Cross Entropy in PyTorch is different from what I learnt (Not about logit input, but about the loss for every node) - Cross Validated

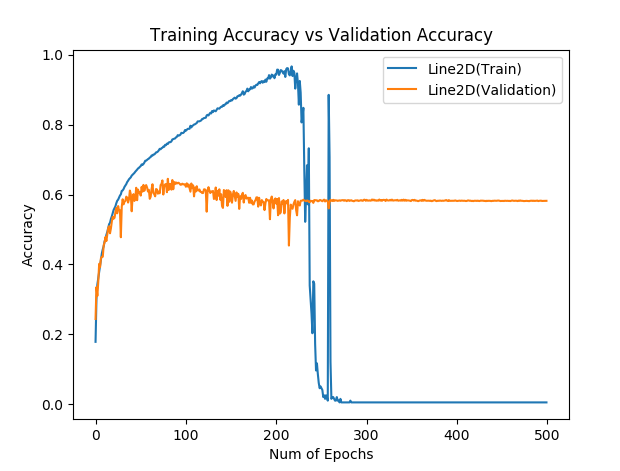

Hinge loss gives accuracy 1 but cross entropy gives accuracy 0 after many epochs, why? - PyTorch Forums