Visualization via t-SNE 3D embedding of 500 clips (each clip is a point...

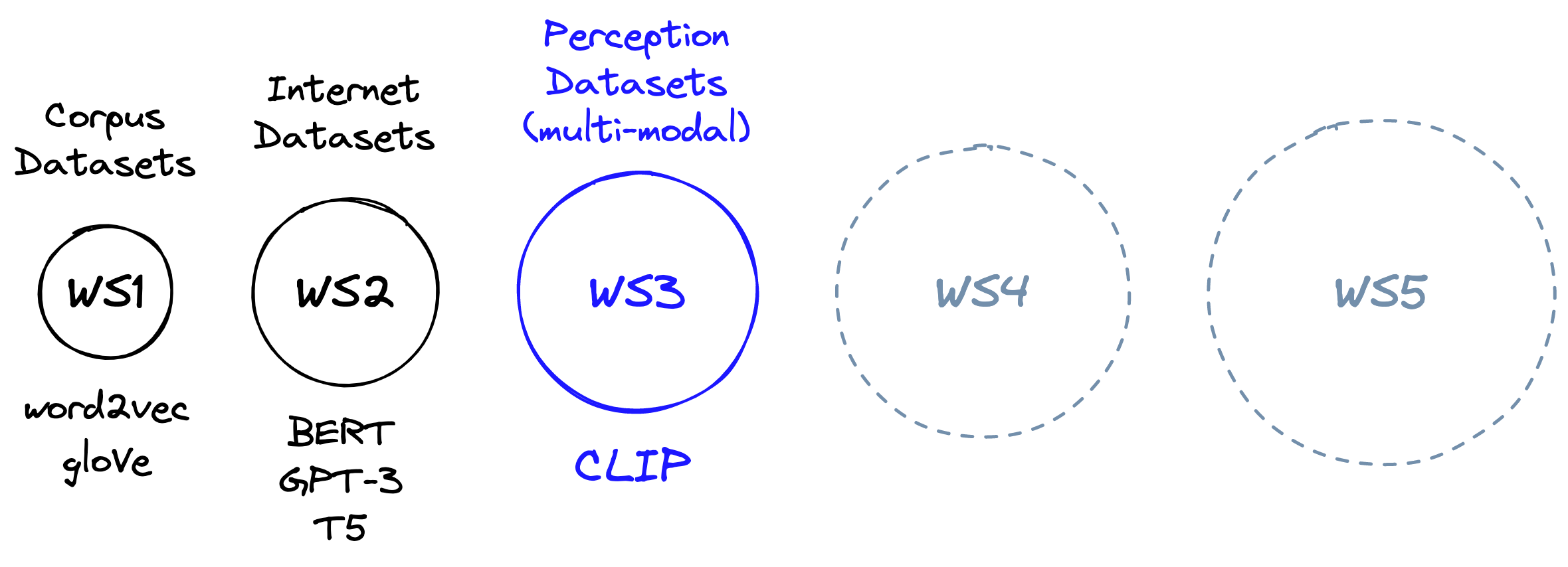

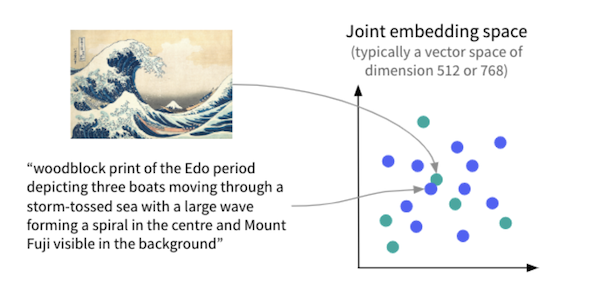

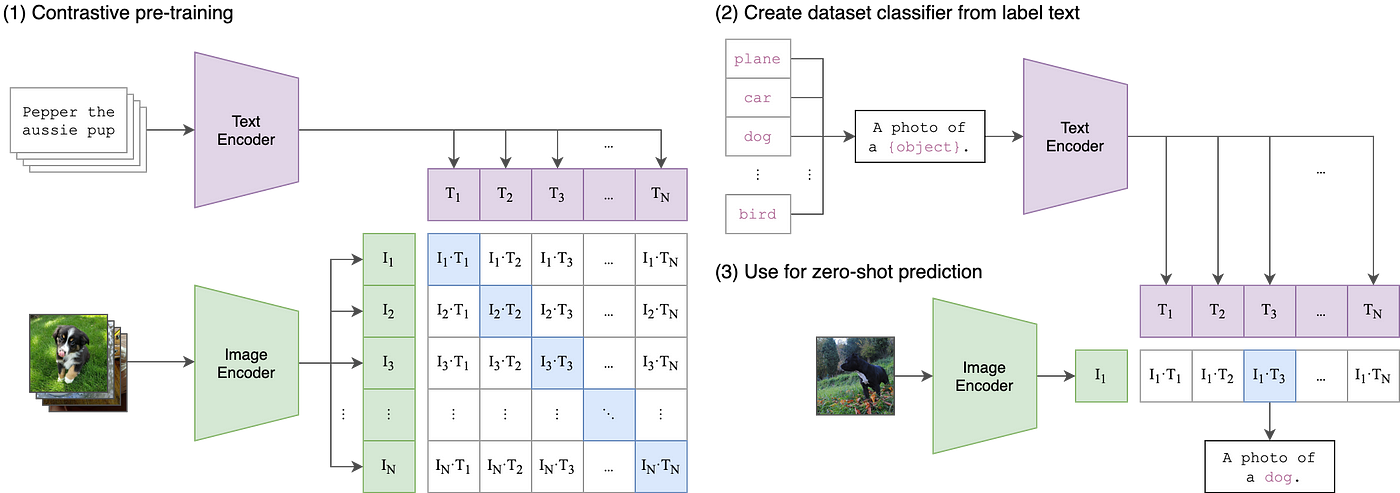

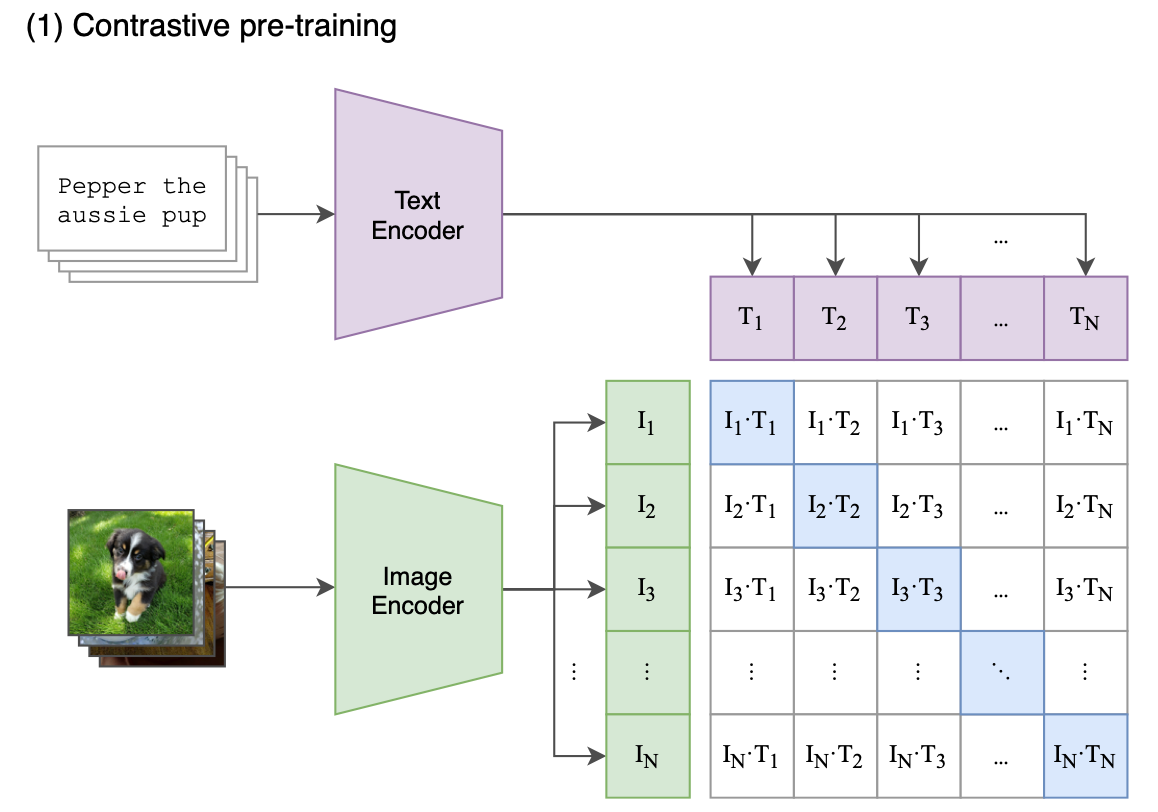

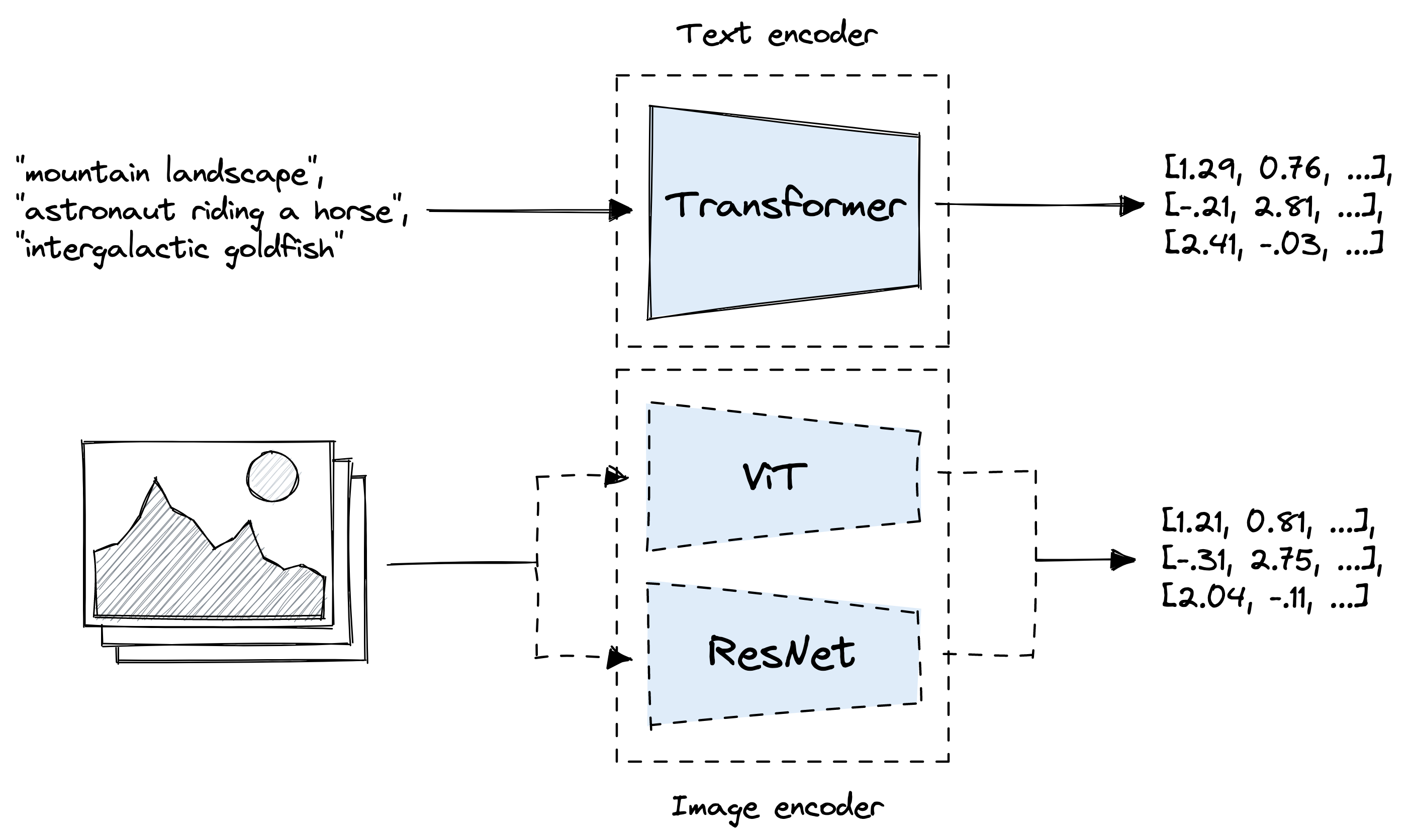

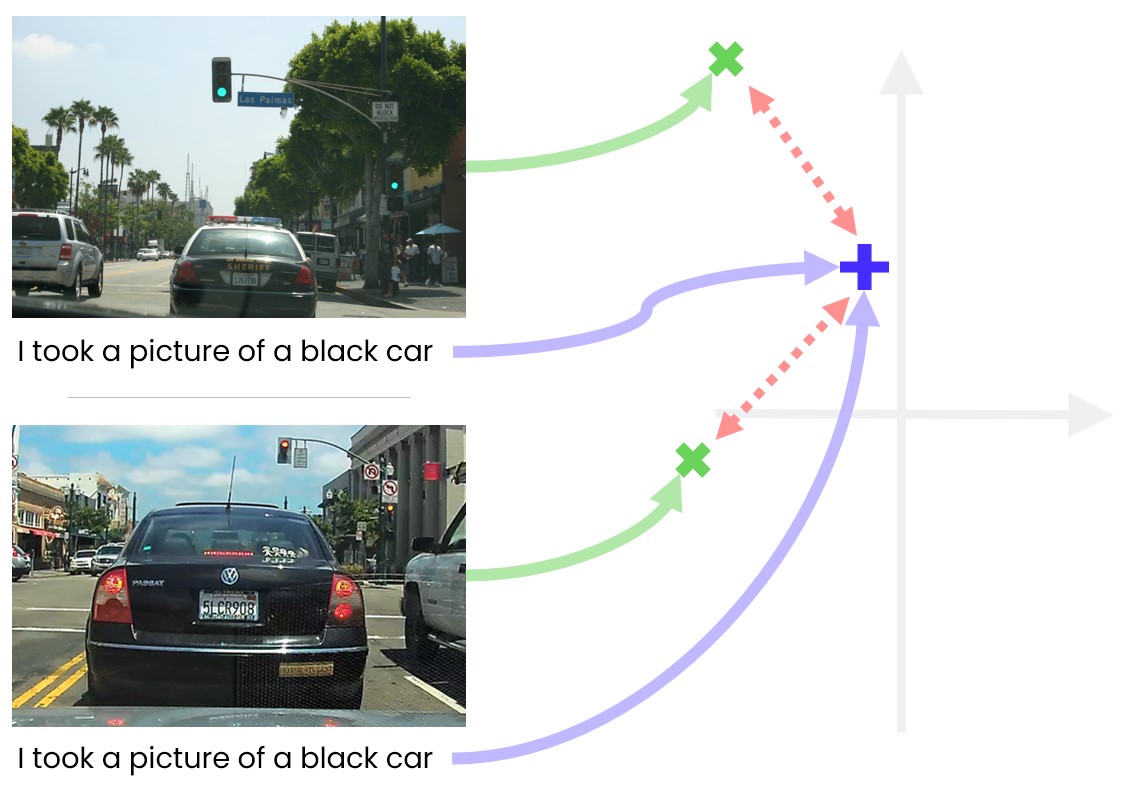

CLIP: The Most Influential AI Model From OpenAI — And How To Use It | by Nikos Kafritsas | Towards Data Science

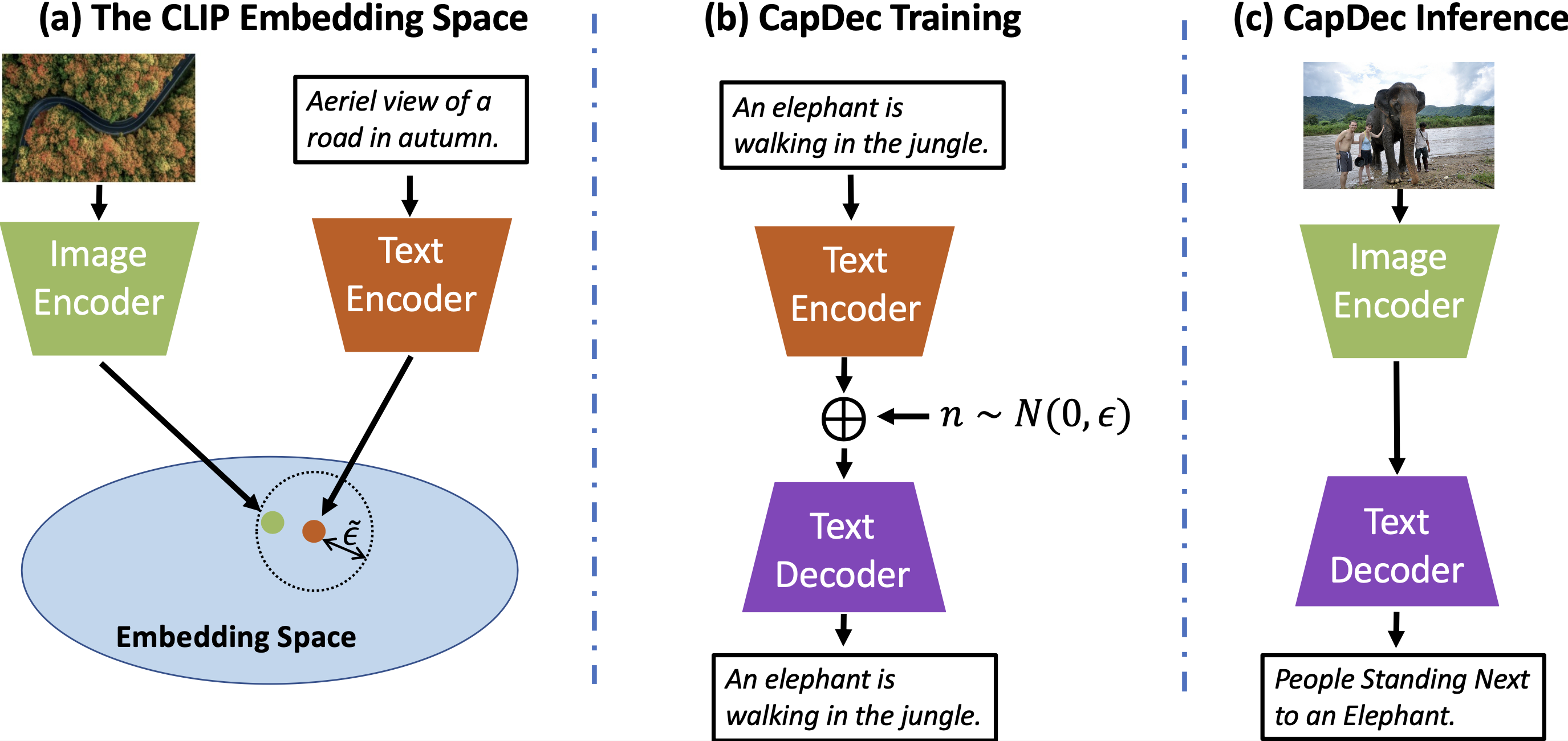

GitHub - DavidHuji/CapDec: CapDec: SOTA Zero Shot Image Captioning Using CLIP and GPT2, EMNLP 2022 (findings)

Incorporating natural language into vision models improves prediction and understanding of higher visual cortex | bioRxiv

Perceptual Reasoning and Interaction Research - Simple but Effective: CLIP Embeddings for Embodied AI

A 2D embedding of clip art styles, computed using t-SNE, shown with "...

Raphaël Millière on Twitter: "CLIP only needs to learn visual features sufficient to match an image with the correct caption. As a result, it's unlikely to preserve the kind of information that