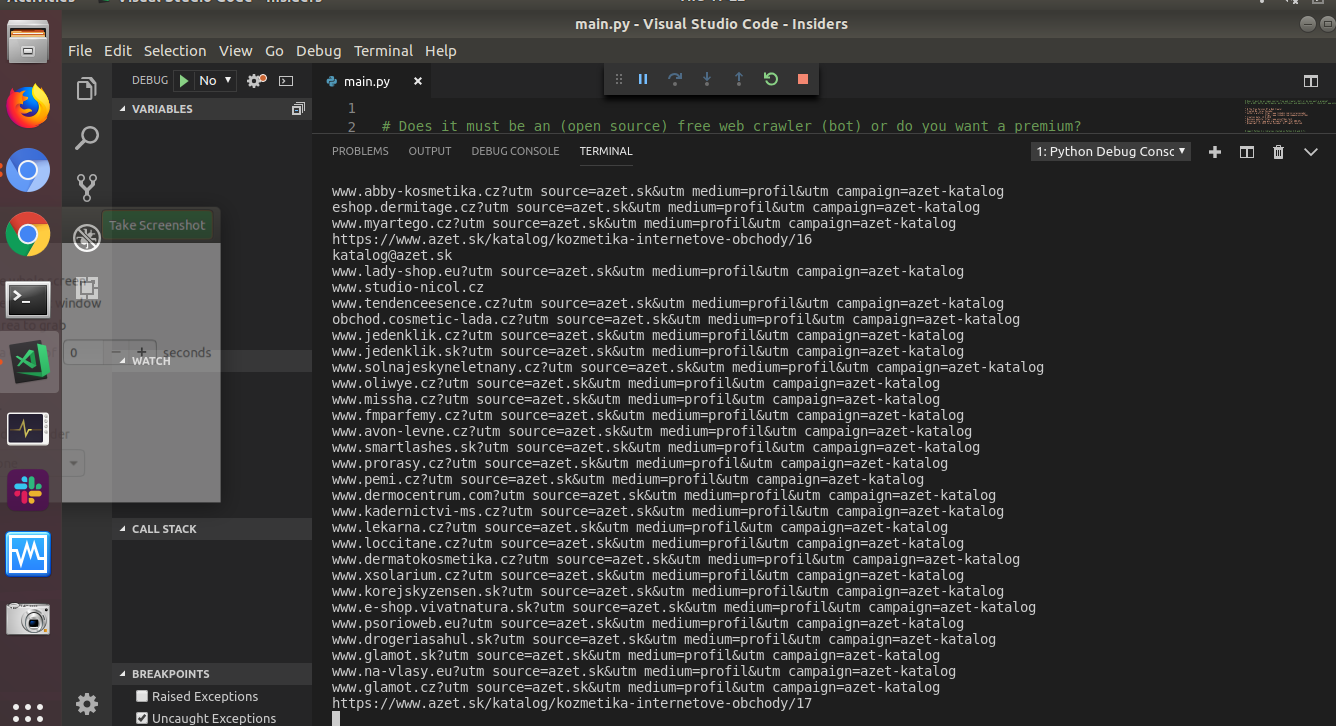

GitHub - p4u/domaincrawler: It is an HTTP crawler which looks for domains in `<a>` tags and stores them into a SQLite database next to their IP address. It works recursively among the

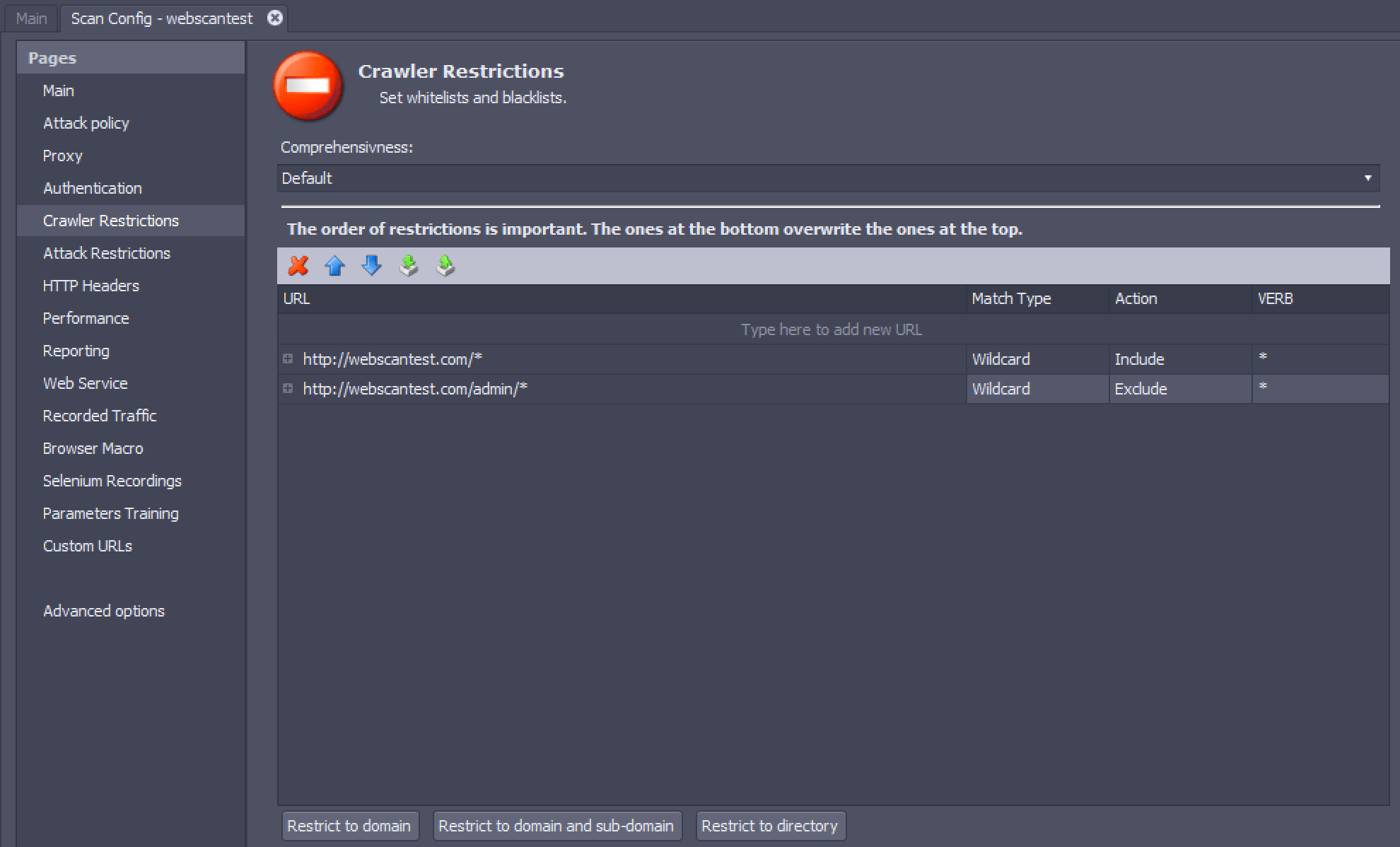

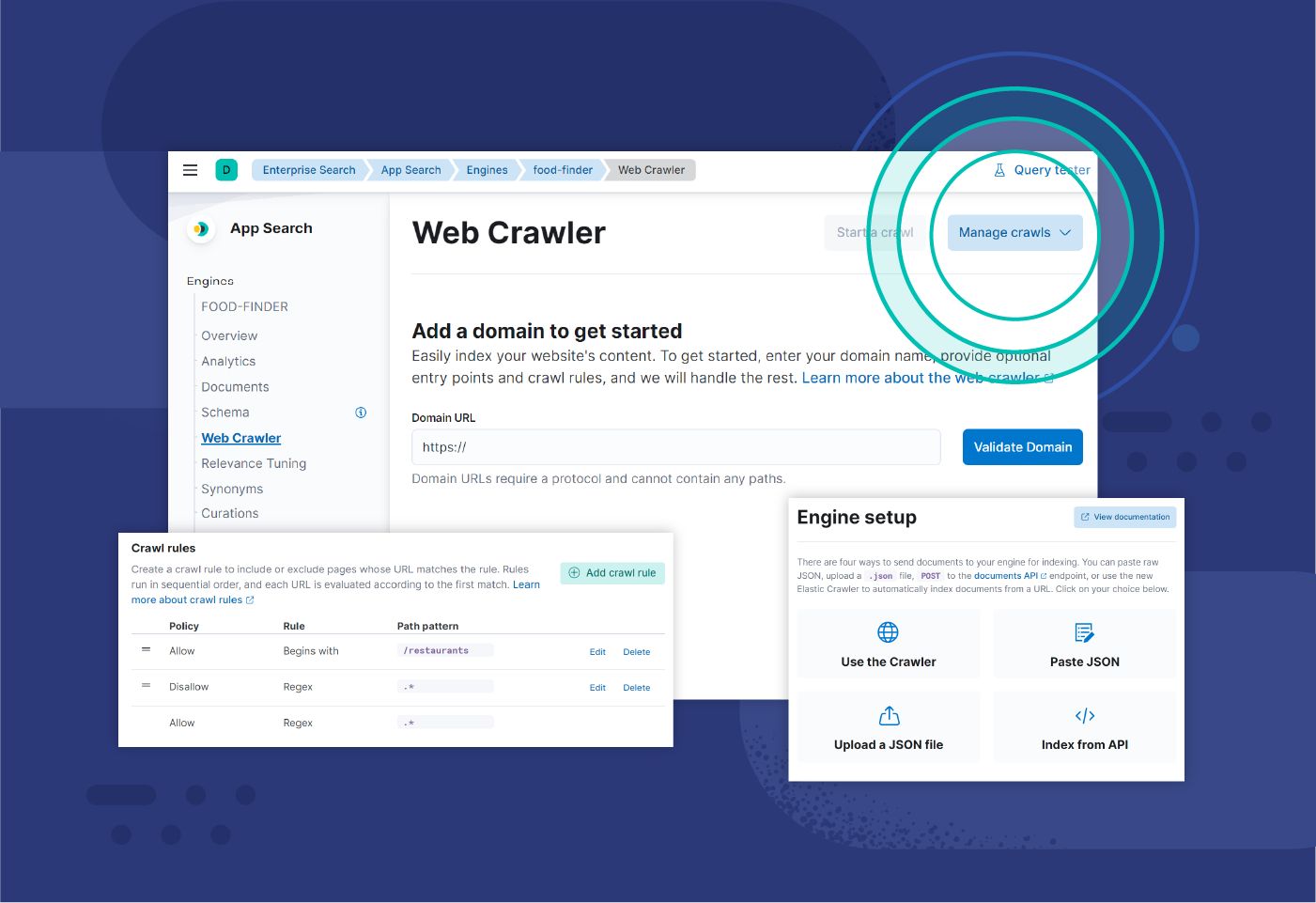

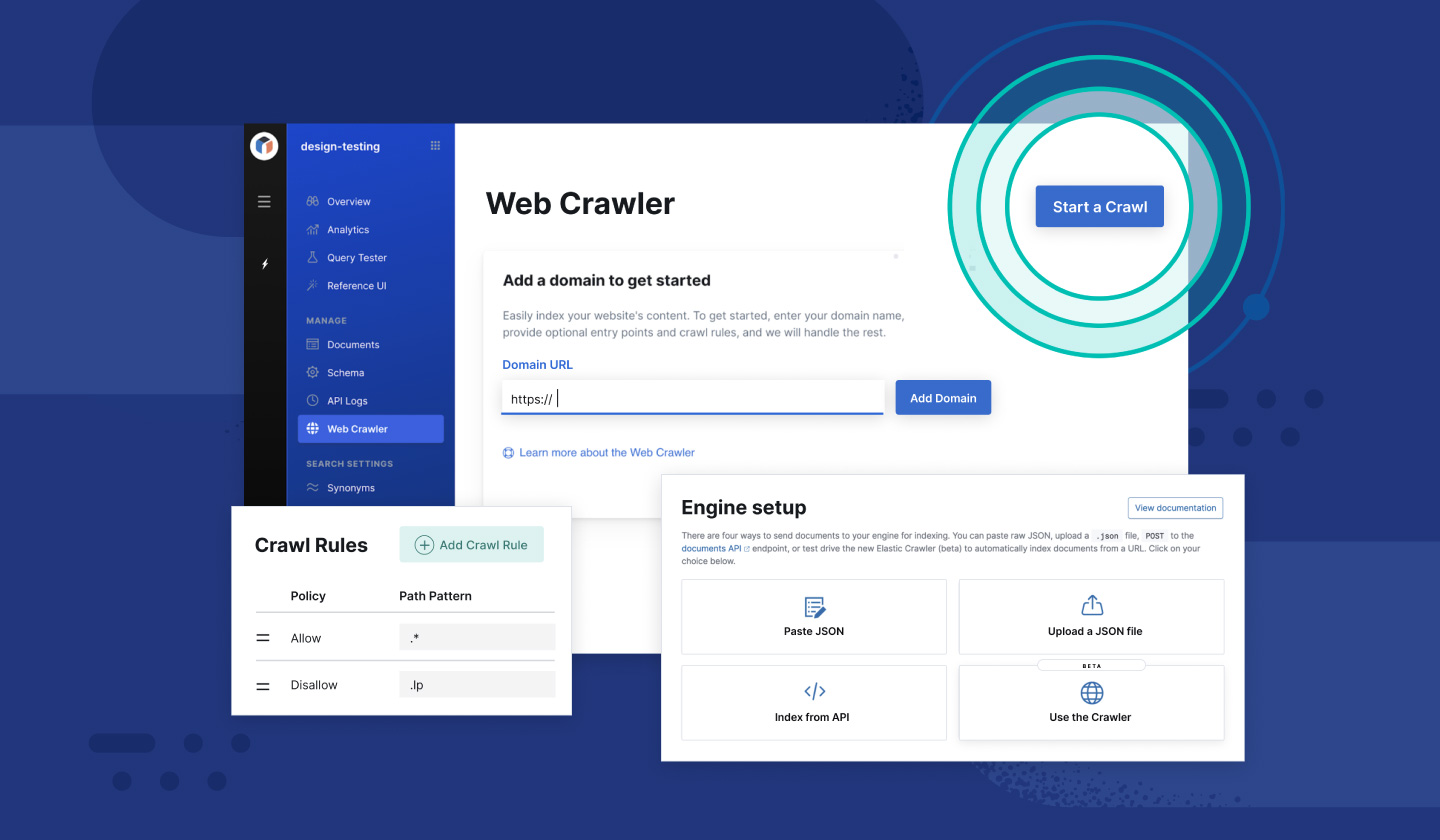

![Crawl a private network using a web crawler on Elastic Cloud | Enterprise Search documentation [8.7] | Elastic](https://www.elastic.co/guide/en/enterprise-search/current/images/crawler-proxy-validation.png)

Crawl a private network using a web crawler on Elastic Cloud | Enterprise Search documentation [8.7] | Elastic